
🐸TTS is a library for advanced Text-to-Speech generation. It is built on the latest research and designed to achieve the best trade-off among ease of training, speed and quality. 🐸TTS comes with pretrained models and tools for measuring dataset quality, and is already used in 20+ languages for products and research projects.

📰 Subscribe to 🐸Coqui.ai Newsletter

📢 English Voice Samples and SoundCloud playlist

📄 Text-to-Speech paper collection

💬 Where to ask questions

Please use our dedicated channels for questions and discussion. Help is much more valuable if it's shared publicly so that more people can benefit from it.

| Type | Platforms |
|---|---|
| 🚨 Bug Reports | GitHub Issue Tracker |
| 🎁 Feature Requests & Ideas | GitHub Issue Tracker |
| 👩💻 Usage Questions | GitHub Discussions |
| 🗯 General Discussion | GitHub Discussions or Gitter Room |

🔗 Links and Resources

| Type | Links |
|---|---|
| 💼 Documentation | ReadTheDocs |
| 💾 Installation | TTS/README.md |
| 👩💻 Contributing | CONTRIBUTING.md |
| 📌 Road Map | Main Development Plans |
| 🚀 Released Models | TTS Releases and Experimental Models |

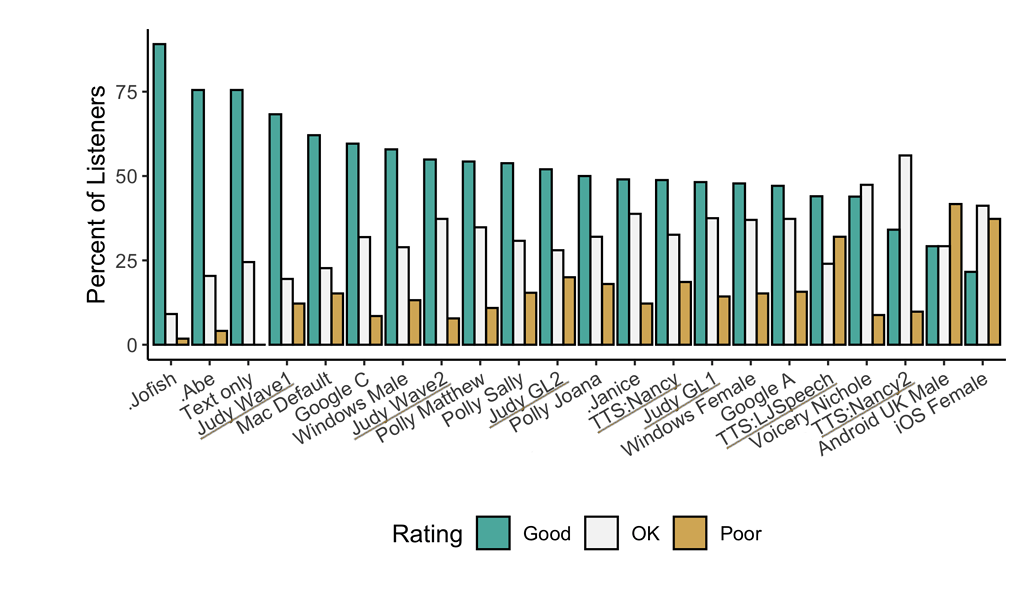

🥇 TTS Performance

Underlined "TTS*" and "Judy*" are 🐸TTS models

Features

- High-performance Deep Learning models for Text2Speech tasks.

- Text2Spec models (Tacotron, Tacotron2, Glow-TTS, SpeedySpeech).

- Speaker Encoder to compute speaker embeddings efficiently.

- Vocoder models (MelGAN, Multiband-MelGAN, GAN-TTS, ParallelWaveGAN, WaveGrad, WaveRNN)

- Fast and efficient model training.

- Detailed training logs on the terminal and Tensorboard.

- Support for Multi-speaker TTS.

- Efficient, flexible, lightweight but feature complete Trainer API.

- Released and ready-to-use models.

- Tools to curate Text2Speech datasets under dataset_analysis.

- Utilities to use and test your models.

- Modular (but not too much) code base enabling easy implementation of new ideas.

Implemented Models

Text-to-Spectrogram

- Tacotron: paper

- Tacotron2: paper

- Glow-TTS: paper

- Speedy-Speech: paper

- Align-TTS: paper

- FastPitch: paper

- FastSpeech: paper

End-to-End Models

- VITS: paper

Attention Methods

- Guided Attention: paper

- Forward Backward Decoding: paper

- Graves Attention: paper

- Double Decoder Consistency: blog

- Dynamic Convolutional Attention: paper

- Alignment Network: paper

Speaker Encoder

Vocoders

- MelGAN: paper

- MultiBandMelGAN: paper

- ParallelWaveGAN: paper

- GAN-TTS discriminators: paper

- WaveRNN: origin

- WaveGrad: paper

- HiFiGAN: paper

- UnivNet: paper

You can also help us implement more models.

Install TTS

🐸TTS is tested on Ubuntu 18.04 with python >= 3.7, < 3.11.
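The supported interpreter range can be checked before installing. The following is a minimal sketch under the version bounds stated above; the helper name `is_supported_python` is hypothetical and not part of 🐸TTS.

```python
import sys

# Tested interpreter range stated above: python >= 3.7, < 3.11.
MIN_VERSION = (3, 7)
MAX_VERSION_EXCLUSIVE = (3, 11)


def is_supported_python(version=None):
    """Return True if the (major, minor) version falls in the tested range.

    Hypothetical helper for illustration; not part of the TTS package.
    """
    if version is None:
        version = sys.version_info[:2]
    return MIN_VERSION <= tuple(version[:2]) < MAX_VERSION_EXCLUSIVE


if __name__ == "__main__":
    current = ".".join(str(part) for part in sys.version_info[:2])
    print("Python", current, "in tested range:", is_supported_python())
```

A version outside this range may still work, but it is not one the project states it is tested on.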

If you are only interested in synthesizing speech with the released 🐸TTS models, installing from PyPI is the easiest option.

pip install TTS

If you plan to code or train models, clone 🐸TTS and install it locally.

git clone https://github.com/coqui-ai/TTS

pip install -e .[all,dev,notebooks] # Select the relevant extras

If you are on Ubuntu (Debian), you can also run the following commands for installation.

$ make system-deps # intended to be used on Ubuntu (Debian). Let us know if you have a different OS.

$ make install

If you are on Windows, 👑@GuyPaddock wrote installation instructions here.

Use TTS

Single Speaker Models

- List provided models:

$ tts --list_models

- Run TTS with default models:

$ tts --text "Text for TTS"

- Run a TTS model with its default vocoder model:

$ tts --text "Text for TTS" --model_name "<language>/<dataset>/<model_name>"

- Run with specific TTS and vocoder models from the list:

$ tts --text "Text for TTS" --model_name "<language>/<dataset>/<model_name>" --vocoder_name "<language>/<dataset>/<model_name>" --out_path output/path/speech.wav

- Run your own TTS model (Using Griffin-Lim Vocoder):

$ tts --text "Text for TTS" --model_path path/to/model.pth --config_path path/to/config.json --out_path output/path/speech.wav

- Run your own TTS and Vocoder models:

$ tts --text "Text for TTS" --model_path path/to/model.pth --config_path path/to/config.json --out_path output/path/speech.wav --vocoder_path path/to/vocoder.pth --vocoder_config_path path/to/vocoder_config.json
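When the `tts` command is driven from a script, building the argument list programmatically avoids quoting mistakes. The sketch below uses only flags that appear in the commands above; the function `build_tts_command` is a hypothetical helper, not part of the 🐸TTS API.

```python
import shlex


def build_tts_command(text, model_name=None, vocoder_name=None,
                      model_path=None, config_path=None, out_path=None):
    """Assemble a `tts` argument list from the flags shown above.

    Hypothetical helper for illustration; it only builds the list and
    does not run anything.
    """
    cmd = ["tts", "--text", text]
    if model_name:
        cmd += ["--model_name", model_name]
    if vocoder_name:
        cmd += ["--vocoder_name", vocoder_name]
    if model_path:
        cmd += ["--model_path", model_path]
    if config_path:
        cmd += ["--config_path", config_path]
    if out_path:
        cmd += ["--out_path", out_path]
    return cmd


cmd = build_tts_command(
    "Text for TTS",
    model_path="path/to/model.pth",
    config_path="path/to/config.json",
    out_path="output/path/speech.wav",
)
# Print a shell-quoted form for inspection.
print(" ".join(shlex.quote(part) for part in cmd))
```

With 🐸TTS installed, the resulting list could be passed to `subprocess.run(cmd, check=True)`, which sidesteps shell quoting entirely.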

Multi-speaker Models

- List the available speakers and choose a <speaker_id> among them:

$ tts --model_name "<language>/<dataset>/<model_name>" --list_speaker_idxs

- Run the multi-speaker TTS model with the target speaker ID:

$ tts --text "Text for TTS." --out_path output/path/speech.wav --model_name "<language>/<dataset>/<model_name>" --speaker_idx <speaker_id>

- Run your own multi-speaker TTS model:

$ tts --text "Text for TTS" --out_path output/path/speech.wav --model_path path/to/model.pth --config_path path/to/config.json --speakers_file_path path/to/speaker.json --speaker_idx <speaker_id>
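When running your own models, `--model_path` expects the checkpoint and `--config_path` expects the config file, and the two are easy to swap. A small pre-flight check can catch that before launching. This is a sketch that assumes checkpoints end in `.pth` and configs in `.json`, as in the example paths; `check_model_paths` is a hypothetical helper, not part of 🐸TTS.

```python
from pathlib import Path


def check_model_paths(model_path, config_path):
    """Raise ValueError if the checkpoint/config arguments look swapped.

    Assumption: checkpoints use a .pth suffix and configs a .json suffix,
    as in the example commands. Other naming schemes need a different check.
    """
    model_ext = Path(model_path).suffix.lower()
    config_ext = Path(config_path).suffix.lower()
    if model_ext == ".json" and config_ext == ".pth":
        raise ValueError("--model_path and --config_path appear to be swapped")
    if model_ext != ".pth":
        raise ValueError(f"expected a .pth checkpoint, got {model_path!r}")
    if config_ext != ".json":
        raise ValueError(f"expected a .json config, got {config_path!r}")
    return model_path, config_path


# Passes silently when the arguments are in the expected order.
check_model_paths("path/to/model.pth", "path/to/config.json")
```

The check inspects only file names, so it does not confirm that either file exists or is loadable.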

Directory Structure

|- notebooks/ (Jupyter Notebooks for model evaluation, parameter selection and data analysis.)
|- utils/ (common utilities.)
|- TTS
    |- bin/ (folder for all the executables.)
        |- train*.py (train your target model.)
        |- distribute.py (train your TTS model using Multiple GPUs.)
        |- compute_statistics.py (compute dataset statistics for normalization.)
        |- ...
    |- tts/ (text to speech models)
        |- layers/ (model layer definitions)
        |- models/ (model definitions)
        |- utils/ (model specific utilities.)
    |- speaker_encoder/ (Speaker Encoder models.)
        |- (same)
    |- vocoder/ (Vocoder models.)
        |- (same)